I used to enjoy tinkering with microcontroller projects as a hobby in my free time. Since moving to Sydney two and a half years ago, I hadn’t unpacked my box of electronic components, mostly for lack of inspiration about what to build.

Today was my day to re-open the box.

Despite not being able to think of anything actually good to create, I decided to just send it and build something anyway, with the following goals:

- Needlessly complex

- Entirely useless

What do I have to work with?

- Battery

- Power supply

- Raspberry Pi Zero

- Camera

- Small display

By pulling apart old projects and scraping together parts, I was able to gather enough bits for a portable device capable of taking pictures and displaying data.

While looking at the parts, inspiration struck. Do you find the task of determining whether or not you’re looking at a horse tiresome?

Then do I ever have the device for you! Introducing: the portable horse detector.

Let me take you on the journey of building a device that can detect whether it’s pointed at a horse.

Step 1: MACHINE LEARNING

For the prestigious task of horse image detection, my mind immediately jumped to using a pre-trained image classifier such as Google’s Inception model, because training one myself seemed like a lot of work. However, a Raspberry Pi Zero is an itty-bitty computer with only 512 MB of RAM.

With this little horsepower, can I even run TensorFlow on it? Turns out there’s something called TensorFlow Lite, designed for mobile and other devices with little juice.

After a few sudo apt-gets, we were away.

TensorFlow Lite takes slimmed-down (quantised) versions of existing models that are less resource-intensive, which is what makes it capable of running on something as powerful as the first computer I ever owned back in 2000. I gathered some pretrained models online, and it was time to test them out on a few horse pics to see the results:

- Inception v2 is capable of detecting 712 objects, some as niche as ‘digital clock’.

- MobileNet (another model) is capable of detecting 1001 objects.

- Both have labels encompassing numerous specific breeds of dog.
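Part of the problem is structural: a classifier like these can only ever answer with one of the labels it was trained on. A minimal sketch of how raw model scores become an answer, assuming a softmax followed by a top-k lookup against a label list; the labels and scores below are made up for illustration and are not taken from either model:

```python
import math


def softmax(scores):
    """Turn raw model scores into probabilities that sum to 1."""
    biggest = max(scores)  # subtract the max so math.exp can't overflow
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(scores, labels, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical label list; real label files have hundreds of entries.
labels = ["Mexican hairless", "digital clock", "grass", "zebra", "golden retriever"]
# Made-up scores standing in for the model's output on one horse photo.
scores = [2.1, -1.0, 1.7, 0.4, 0.2]

# "horse" is not in labels, so it can never be the answer.
for label, prob in top_k(scores, labels):
    print(f"{label}: {prob:.2f}")
```

Whatever the photo, the answer is always drawn from `labels`; if "horse" isn’t in the list, no amount of horse will produce it.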

Turns out neither of them can detect a horse.

Instead, I was often told the images contained a ‘Mexican hairless’, or grass (to be fair, most horse images contain grass).

Does this look like a horse to you?

So my only option was to retrain with a new horse-based dataset. Thankfully, I could use something called transfer learning to build on top of existing models such as the ones above.
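Transfer learning here means keeping a pretrained network’s layers frozen and training only a new final layer on your own data. The real thing would be done in TensorFlow/Keras; the following is a dependency-free toy sketch, not the code used for this project. `frozen_base` is a hypothetical stand-in for a pretrained feature extractor, the new head is plain logistic regression trained by gradient descent, and the "horse" images are invented high-contrast noise:

```python
import math
import random


def frozen_base(image):
    """Hypothetical stand-in for a pretrained network with frozen layers.

    In real transfer learning this would be something like MobileNet minus
    its final classification layer. Here it is a fixed function returning
    two toy features so the sketch runs without TensorFlow; nothing in it
    is ever trained.
    """
    brightness = sum(image) / len(image)
    contrast = max(image) - min(image)
    return [brightness, contrast]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(images, targets, epochs=500, lr=0.5):
    """Train only a new horse / not-horse head (logistic regression)."""
    # The base is frozen, so its features can be computed once up front.
    features = [frozen_base(img) for img in images]
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, targets):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - y  # gradient of log loss w.r.t. the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(image, weights, bias):
    """Probability that the image is a horse, according to the new head."""
    x = frozen_base(image)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Toy data: "images" are flat lists of pixel values in [0, 1].
random.seed(0)
horses = [[random.uniform(0.0, 1.0) for _ in range(16)] for _ in range(20)]
not_horses = [[random.uniform(0.4, 0.6) for _ in range(16)] for _ in range(20)]
images = horses + not_horses
targets = [1] * len(horses) + [0] * len(not_horses)

weights, bias = train_head(images, targets)
```

The appeal is that only the tiny head is trained, so a modest dataset and a borrowed notebook can be enough, while the expensive feature extraction is inherited from the pretrained model.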

Step 2: Finding bulk horse pictures online

Surprisingly easy, thanks to the extensive datasets on kaggle.com.

There was a slight mishap where I accidentally downloaded more than 2,000 (concerning) computer-generated horse images without realising they weren’t real horses, only to train a model on them (next step) and have it be total crap. Eventually, though, I found a dataset of real animals to use.

Step 3: MACHINE LEARNING AGAIN

As only someone who has no idea what the fuck they’re doing can, I burned numerous hours hosting horse datasets on S3, retraining layers of existing models in a borrowed Google Colab notebook, and testing tentative models on the device.

The models worked well before I quantised and exported them.
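Quantisation is the obvious suspect when a model works before export but not after. TensorFlow Lite’s 8-bit scheme stores each value as an integer `q` plus a `scale` and `zero_point`, recovering approximately `real = scale * (q - zero_point)`. A simplified per-tensor sketch of that round trip in plain Python (real converters also handle per-channel scales and calibrate activation ranges, which this skips); the weights are made-up numbers:

```python
def choose_params(values, qmin=0, qmax=255):
    """Pick a scale and zero point mapping [min, max] onto [qmin, qmax]."""
    lo = min(min(values), 0.0)  # include 0 so that 0.0 is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against all-zero input
    zero_point = round(qmin - lo / scale)
    return scale, zero_point


def quantise(values, scale, zero_point, qmin=0, qmax=255):
    """Float -> 8-bit integer, clamped to the representable range."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantise(qvalues, scale, zero_point):
    """8-bit integer -> approximate float: real = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qvalues]


weights = [-0.73, -0.1, 0.0, 0.05, 0.42, 1.3]  # made-up float weights
scale, zero_point = choose_params(weights)
q = quantise(weights, scale, zero_point)
restored = dequantise(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max round-trip error: {max_error:.4f}")
```

Each value here comes back off by at most half a step (`scale / 2`), so some drift is expected. A model that goes from working to useless after export can still be worth debugging elsewhere, which is how this story turned out.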

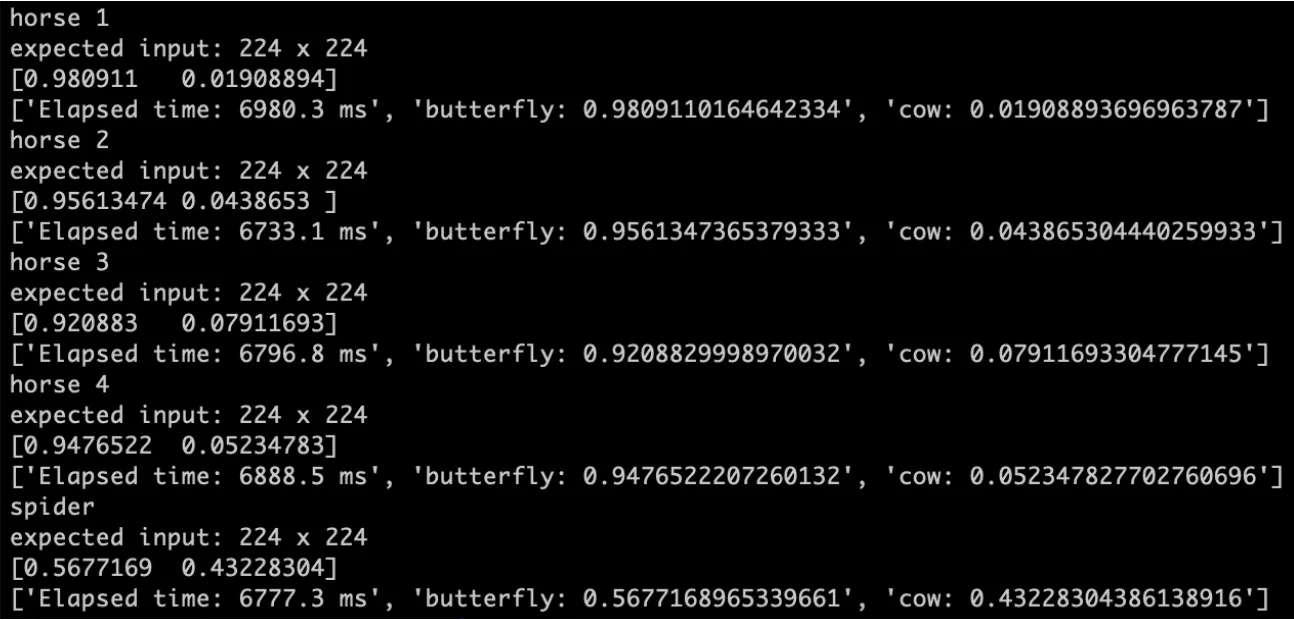

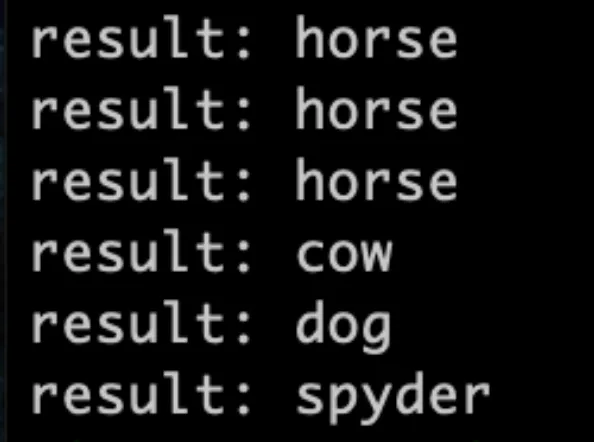

No matter what, I kept getting the same results:

Finally, I grabbed some fresh example code from GitHub and started from scratch, having decided that the problem wasn’t the model but my code.

And, yep, that was it. The code that resized images on the way in (for inference) was what was screwing it all up, and now we’re not horsing around:

(Yes, I know ‘spider’ is spelled with an ‘i’. This was just how the dataset was labelled.)
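The original resizing code isn’t shown, so this is not the fix that was actually applied. As a hypothetical illustration of what inference-time preprocessing has to get right: resize to the model’s input size (nearest-neighbour here, for simplicity), keep width and height in the right order, and scale pixel values to the range the model was trained on. The [-1, 1] scaling for float models and raw 0-255 values for uint8 models are assumptions; check what your particular model expects.

```python
def resize_nearest(pixels, out_w, out_h):
    """Nearest-neighbour resize of an image stored as rows of (r, g, b) tuples."""
    in_h = len(pixels)      # number of rows
    in_w = len(pixels[0])   # number of columns
    return [
        [pixels[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def to_model_input(pixels, out_w, out_h, quantised):
    """Resize, then scale pixel values to the range the model expects."""
    resized = resize_nearest(pixels, out_w, out_h)
    if quantised:
        # Assumption: a uint8 model takes raw 0-255 values unchanged.
        return resized
    # Assumption: a float model trained on inputs scaled to [-1, 1].
    return [[tuple((c - 127.5) / 127.5 for c in px) for px in row] for row in resized]
```

A classic version of this bug is passing (height, width) where (width, height) is expected; PIL’s `Image.resize`, for example, takes (width, height) while array shapes are (rows, columns). On a non-square photo that silently squashes the horse.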

Step 4: Subpar soldering and electrical tape

Wiring up the device was straightforward enough. The count of impromptu revisits to Jaycar stands at 0, which is a sure indicator of likely success.

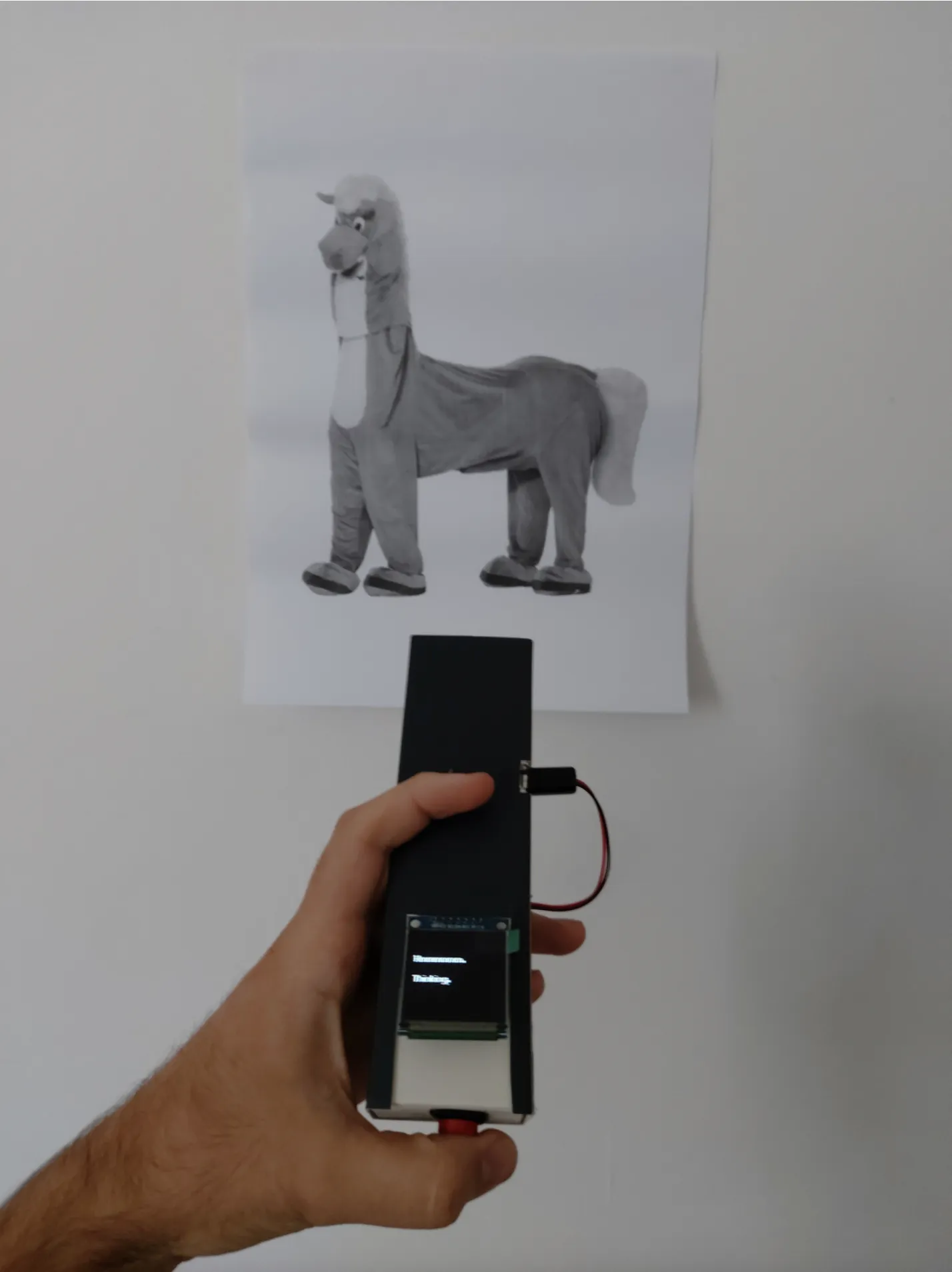

I used an old cardboard pen box as the housing, as I don’t own a 3D printer anymore.

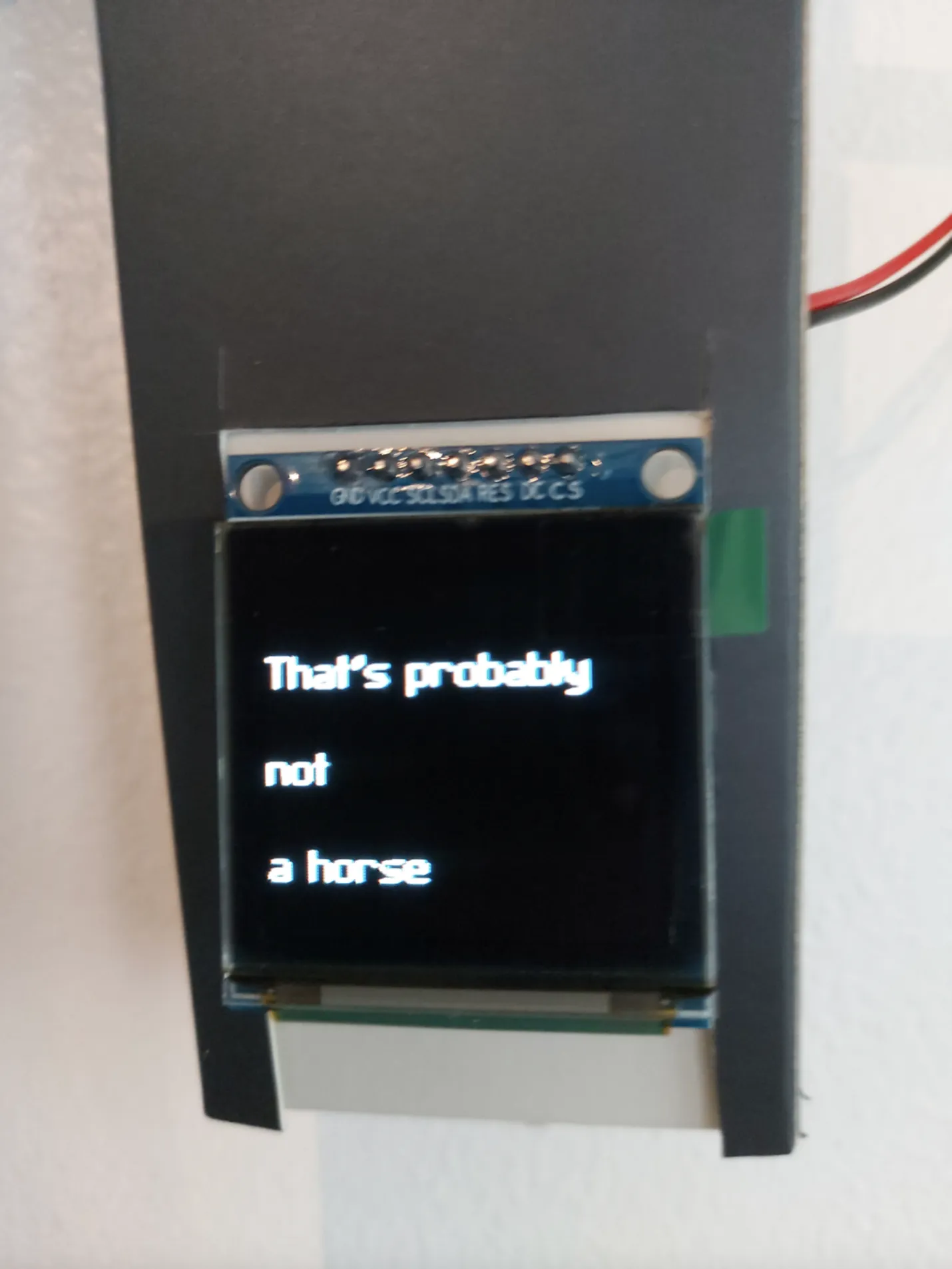

Finally, the device the world needs

Step 5: Time to test it out

You can lead a horse to water but you can’t make it drink.

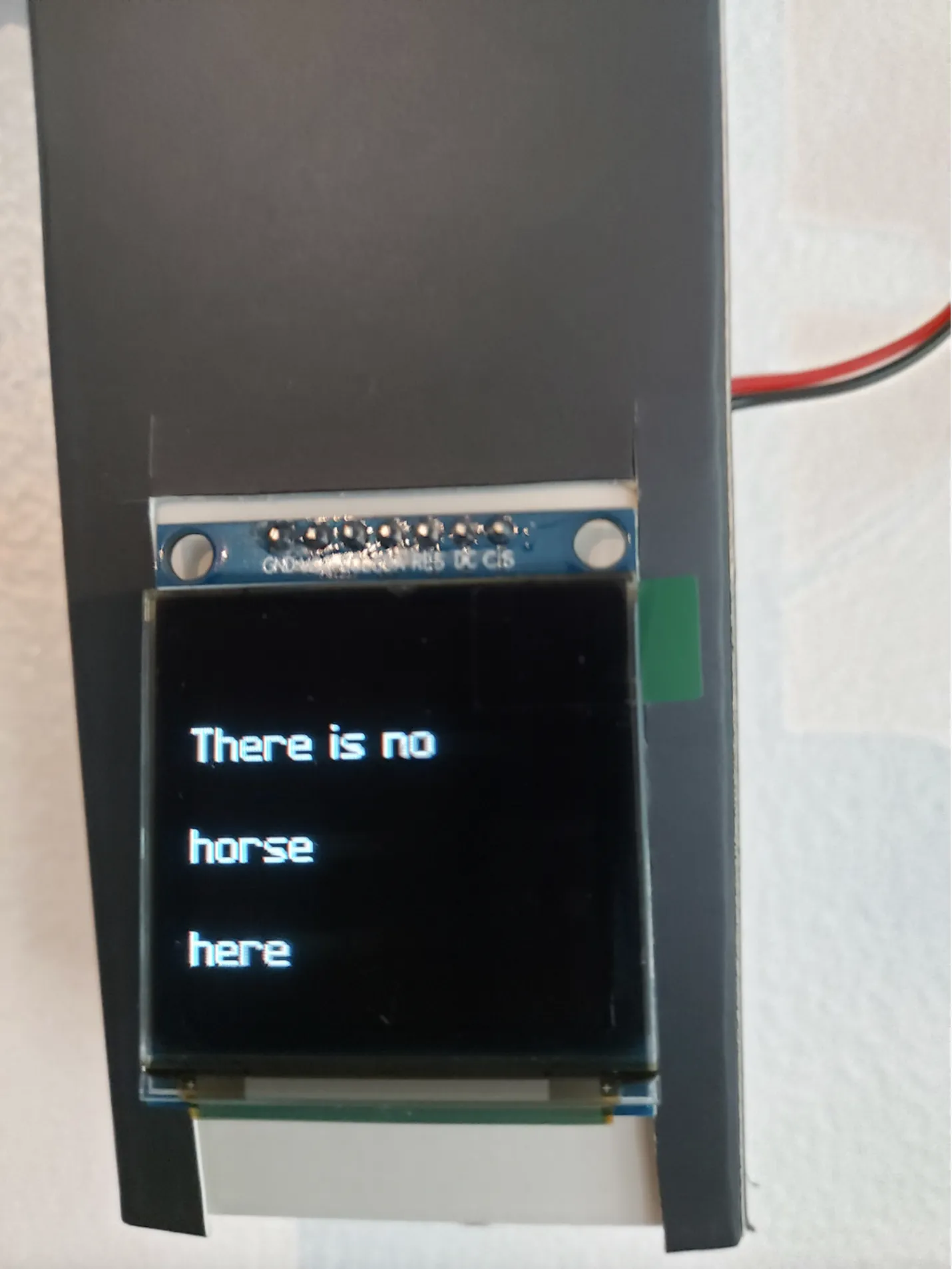

This wasn’t the expected result.

Correct.

Did I go to Officeworks just to print out horse pictures for testing?

Yes.

Did they really need to be A3 size? Yes.

Further testing revealed that the device is extremely ineffective and inaccurate.

This may be due to the camera having no IR filter, which gives images an eerie, purplish, overexposed tinge.

Performance benchmarking showed that the device takes around 2 minutes to start up. Once it’s going, though, it takes at most 40 seconds to incorrectly detect a horse. That’s a worst-case throughput of 90 incorrect horses per hour.
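The arithmetic behind that figure, plus a way to measure it: one detection per 40 s is 3600 / 40 = 90 detections per hour, and since 40 s is the stated maximum, that’s the worst case. Counting the roughly 2-minute start-up, the first hour after power-on manages 87. A small sketch; `time_one` is a hypothetical helper for timing any single call, such as one inference:

```python
import time

SECONDS_PER_HOUR = 3600


def detections_per_hour(seconds_per_detection):
    """Steady-state throughput, ignoring start-up time."""
    return SECONDS_PER_HOUR / seconds_per_detection


def first_hour_detections(seconds_per_detection, startup_seconds):
    """Whole detections completed in the first hour after power-on."""
    usable = max(0, SECONDS_PER_HOUR - startup_seconds)
    return int(usable // seconds_per_detection)


def time_one(fn, *args):
    """Wall-clock seconds taken by a single call to fn(*args)."""
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start
```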

Conclusions:

Whilst still in its infancy, this device could be revolutionary.

If you’re allergic to horses, or have a well-justified, crippling fear of them, this could be a serious game changer. As long as the horse is stationary or slow-moving.

Furthermore, I think the camera’s missing IR filter is actually a feature: now the horse detector can more easily improperly detect horses in low-light conditions.

Where to next is anyone’s guess, really.